Most modern processors allow their clock frequency to be scaled with demand: when CPU load is low, the clock speed is reduced, saving a considerable amount of energy. When demand is higher, it's increased, so performance should be unaffected. In theory.

Most modern processors also have multiple cores, allowing parallel execution of tasks, and increasing performance over a single-core processor of the same clock speed.

However, some workloads only use a single core, sometimes by their nature and sometimes because of the way they're implemented. On a 4-core CPU, a single-threaded task can consume at most 25% of the CPU: one of the four cores. This is where the problem starts. At least on Windows Server 2012 R2, such a task does not seem to cause the frequency to be scaled up, even though it is CPU-bound.
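
To make the 25% arithmetic concrete, here is a toy model in Python. It assumes, purely for illustration, a governor that raises the clock only when the *average* utilization across all cores passes a fixed threshold. `naive_should_scale_up` and the 50% threshold are hypothetical; this is not Windows' actual power-management logic.

```python
def aggregate_utilization(per_core_busy):
    """Average busy fraction across all cores (0.0 to 1.0)."""
    return sum(per_core_busy) / len(per_core_busy)


# Hypothetical policy: raise the clock only when the *average* load
# across all cores exceeds 50%. Illustrative only.
SCALE_UP_THRESHOLD = 0.5


def naive_should_scale_up(per_core_busy):
    """Decide whether to scale up based on the average load alone."""
    return aggregate_utilization(per_core_busy) > SCALE_UP_THRESHOLD


# One core pegged at 100% by a single-threaded task, three cores idle.
single_threaded = [1.0, 0.0, 0.0, 0.0]
print(aggregate_utilization(single_threaded))  # 0.25
print(naive_should_scale_up(single_threaded))  # False
```

With one core saturated and three idle, the average never gets past 25%, so a policy keyed on the average alone never fires, however CPU-bound the thread is.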

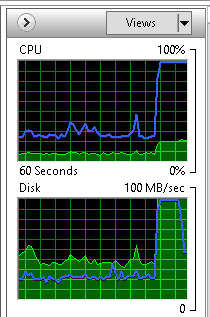

You can force the CPU to always run a maximum speed by using the "high performance" power plan, but this comes at the cost of increased energy consumption and heat generation. Here's an example:

Throughout the period shown in the graph, VMware's OVF Tool is extracting a virtual machine image. It's a CPU-bound, single-threaded operation. On the CPU graph, the blue line shows frequency; the green line shows usage (as a proportion of the maximum available with all four cores running at maximum frequency).
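
Assuming the usage line is computed the way that description suggests (busy time weighted by the current frequency, then divided across all four cores), this small Python sketch models it. The function name and the 50%-of-maximum frequency figure are hypothetical, chosen only to show the shape of the calculation.

```python
def normalized_usage(per_core_busy, freq_ratio):
    """CPU usage as a fraction of what all cores could do at maximum frequency.

    per_core_busy: busy fraction of each core (0.0 to 1.0)
    freq_ratio: current frequency divided by maximum frequency (0.0 to 1.0)
    """
    return sum(per_core_busy) * freq_ratio / len(per_core_busy)


busy = [1.0, 0.0, 0.0, 0.0]  # one core fully busy with the single thread
print(normalized_usage(busy, 0.5))  # 0.125 (hypothetical half-speed clock)
print(normalized_usage(busy, 1.0))  # 0.25 (full-speed clock)
```

Under this model, the same saturated core reads as 12.5% at half frequency and 25% at full frequency, so the usage line can only rise if the frequency does.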

About three quarters of the way through that period, I switched to the High performance power plan. This raised the frequency to its maximum, and the result is clear: CPU use increases (because the single thread is now running at a higher clock frequency), and so does disk throughput. The task now completes its work roughly three times faster.

It seems a little more intelligence in the frequency governor wouldn't go amiss for single-threaded workloads, where clock frequency, rather than the degree of parallelism, is what determines performance.
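
As one illustration of what that extra intelligence might look like, this hypothetical Python sketch bases the decision on the busiest core instead of the average, so a single saturated core is enough to trigger a frequency increase. The 80% threshold and the function name are assumptions for illustration, not any real governor's policy.

```python
def per_core_should_scale_up(per_core_busy, threshold=0.8):
    """Scale up if any single core is close to saturation.

    A saturated core suggests a thread limited by clock speed,
    regardless of how idle the other cores are.
    """
    return max(per_core_busy) > threshold


print(per_core_should_scale_up([1.0, 0.0, 0.0, 0.0]))  # True: one core pegged
print(per_core_should_scale_up([0.2, 0.2, 0.2, 0.2]))  # False: light load everywhere
```

The trade-off is that a policy like this may also raise the clock for brief single-core spikes, so a practical version would probably need some smoothing over time as well.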
